Introduction

When NVIDIA initially announced the GeForce RTX 40 Series in September, they talked about three specific models: the RTX 4090 24GB, RTX 4080 16GB, and RTX 4080 12GB. The RTX 4090 24GB was available in October of 2022 and quickly followed by the RTX 4080 16GB the next month. However, the RTX 4080 12GB has had a rocky road, including being delayed, “un-launched”, and rebranded as the RTX 4070 Ti 12GB.

Although delayed well past the initial November target launch date, the RTX 4070 Ti 12GB is now available, completing the trio of GPUs NVIDIA announced four months ago. One bonus to this extended launch is that NVIDIA has reduced the MSRP of the RTX 4070 Ti from the initial $899 to $799. But one of the downsides to the RTX 4070 Ti is that NVIDIA is not making their own in-house “Founders Edition” model for the RTX 4070 Ti. This means that the MSRP is likely to be an even more loose “suggestion” than normal, as NVIDIA cannot influence pricing from third-party manufacturers like ASUS, MSI, and PNY using their own Founders Edition model.

Despite the prolonged launch, the RTX 4070 Ti nicely rounds out the RTX 40 Series, giving users a 12GB, 16GB, and 24GB model to choose from, with prices ranging from $799 to $1,599. This is still a hefty entry price for many users, but we fully expect lower-end 40 series cards to come in the future that will provide a lower entry point.

If you want to see the full specs for the GeForce RTX 40 Series GPUs, we recommend checking out the NVIDIA GeForce RTX 40 Series product page. But at a glance, here are what we consider to be the most critical specs alongside a range of other cards:

| GPU | VRAM | Cores | Boost Clock | Power | MSRP |
|---|---|---|---|---|---|
| RTX 3060 | 12GB | 3,584 | 1.75 GHz | 170W | $329 |
| RTX 3060 Ti | 8GB | 4,864 | 1.67 GHz | 200W | $399 |
| RTX 3070 | 8GB | 5,888 | 1.70 GHz | 220W | $499 |
| RTX 3070 Ti | 8GB | 6,144 | 1.77 GHz | 290W | $599 |
| RTX 3080 | 10GB | 8,704 | 1.71 GHz | 320W | $699 |
| RTX 4070 Ti | 12GB | 7,680 | 2.61 GHz | 285W | $799 |
| Radeon 7900 XT | 20GB | 5,376 | 2.4 GHz | 315W | $899 |
| Radeon 6900 XT | 16GB | 5,120 | 2.25 GHz | 300W | $999 |
| Radeon 7900 XTX | 24GB | 6,144 | 2.5 GHz | 355W | $999 |
| RTX 3080 Ti | 12GB | 10,240 | 1.67 GHz | 350W | $1,199 |
| RTX 4080 | 16GB | 9,728 | 2.51 GHz | 320W | $1,199 |
| RTX 3090 | 24GB | 10,496 | 1.73 GHz | 350W | $1,499 |
| RTX 4090 | 24GB | 16,384 | 2.52 GHz | 450W | $1,599 |
| RTX 3090 Ti | 24GB | 10,752 | 1.86 GHz | 450W | $1,999 |

Regarding MSRP, the RTX 4070 Ti slots above the RTX 3080 and below both the RTX 3080 Ti and the AMD Radeon RX 7900 XT. This is very similar to the RTX 4080, which is more expensive than the previous generation card of the same “level” (in terms of naming). However, we will point out that you shouldn’t compare these GPUs based on the model name, but rather in terms of VRAM and straight performance. You should often look at one model “up” the previous generation’s stack for head-to-head comparisons.

The RTX 4090 24GB is also a bit more expensive than the previous generation RTX 3090, but in terms of capability, it should primarily be compared to the RTX 3090 Ti, which makes it $400 less expensive than the previous generation.

Test Setup

Test Platform

| Benchmark Software |
|---|
| DaVinci Resolve 18.1.2, PugetBench for DaVinci Resolve 0.93.2 |
| Premiere Pro 23.1.0, PugetBench for Premiere Pro 0.95.6 |
| Topaz AI Suite (Video AI 3.0.11.0, Gigapixel AI 6.2.2, DeNoise AI 3.7.0, Sharpen AI 4.1.0) |
| Unreal Engine 4.26 |
| OctaneBench 2020.1.5 |
| Blender 3.4.0 |
| V-Ray 5.02.00 |
| RedShift 3.5.08 |

To see how the NVIDIA GeForce RTX 40 Series GPUs perform, we will compare them to the previous generation RTX 30 Series and the older GeForce RTX 2080 Ti for additional context. We will also include the top GPUs from Intel and AMD – the Intel Arc A770, and the AMD Radeon RX 7900 XTX. The test system we will be using is one of the fastest platforms currently available for most of the applications we are testing and is built around the AMD Threadripper Pro 5975WX to minimize any potential CPU bottlenecks.

We will use many of our PugetBench series of benchmarks that can take advantage of more powerful GPUs. Most of these benchmarks include the ability to upload the results to our online database, so if you want to know how your system compares, you can download and run the benchmark yourself. Several GPU rendering benchmarks supplement our testing to show off the GPU rendering performance of these cards.

Video Editing: DaVinci Resolve Studio

For our first look at the NVIDIA GeForce RTX 40 Series cards, we will examine performance in DaVinci Resolve Studio. More so than any other NLE (Non-Linear Editor) currently on the market, Resolve can make terrific use of high-end GPUs and even multi-GPU setups. The three main areas the GPU is used for are processing GPU effects, H.264/HEVC decoding/encoding, and debayering (and optionally decoding) RAW media.

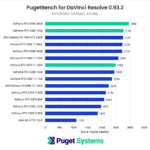

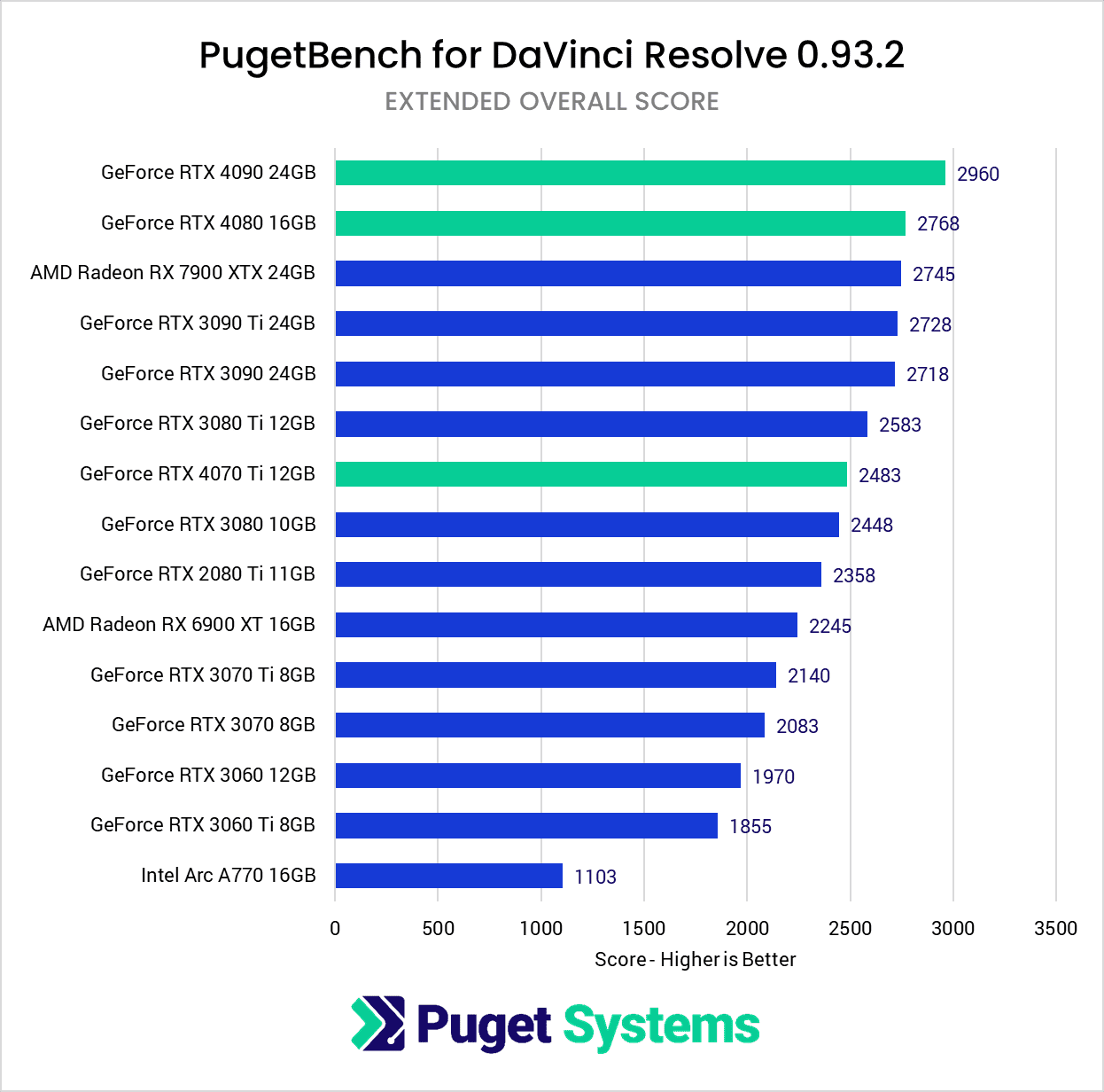

To start, we want to look at the Overall Score, which combines everything we test for Resolve. This is often a good indicator of what kind of relative performance a “typical” (if such a thing exists) Resolve user may see with different hardware but is less focused on the GPU as it includes many tasks that are more CPU focused. In terms of overall performance, the GeForce RTX 4090 does particularly well, topping our charts and beating the next fastest GPU by about 7%. The RTX 4080 also does well but only matches the (albeit more expensive) previous generation RTX 3090/3090 Ti, as well as the (slightly less expensive) AMD Radeon RX 7900 XTX.

The GeForce RTX 4070 Ti is disappointing, as it only matches the similarly priced RTX 3080 from the previous generation. It does have 2GB more VRAM, but from a price/performance standpoint, it is no better than the previous generation.

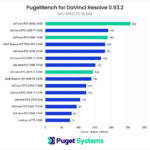

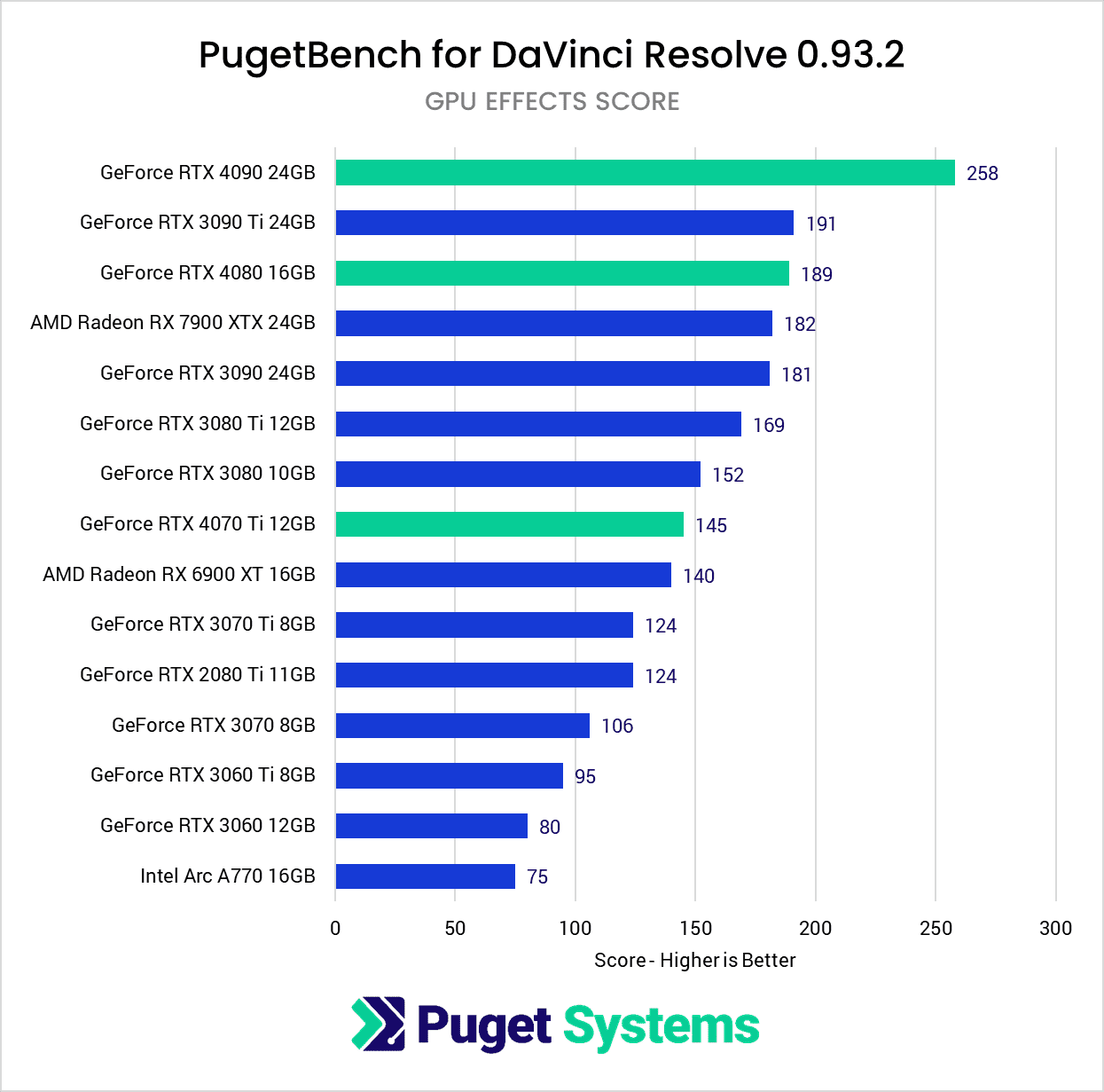

This largely holds true for the “GPU Effects” portion of our benchmark (chart #2), only with the RTX 4090 extending its lead over the next fastest GPU to 35%. This makes it 43% faster than the similarly priced RTX 3090 from the previous generation, which is an excellent gen-over-gen performance gain. The RTX 4080 is faster than the previous generation as well, but it only beats the RTX 3080 Ti by a smaller 12%.

Once again, the RTX 4070 Ti is the outlier here, as it is about 5% slower than the RTX 3080. Remember that the RTX 4070 Ti is priced with an MSRP of $100 higher than the RTX 3080. In other words, it appears that the RTX 4070 Ti has something about it that makes it a worse value than the previous generation for this test.

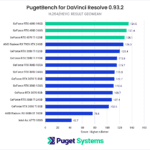

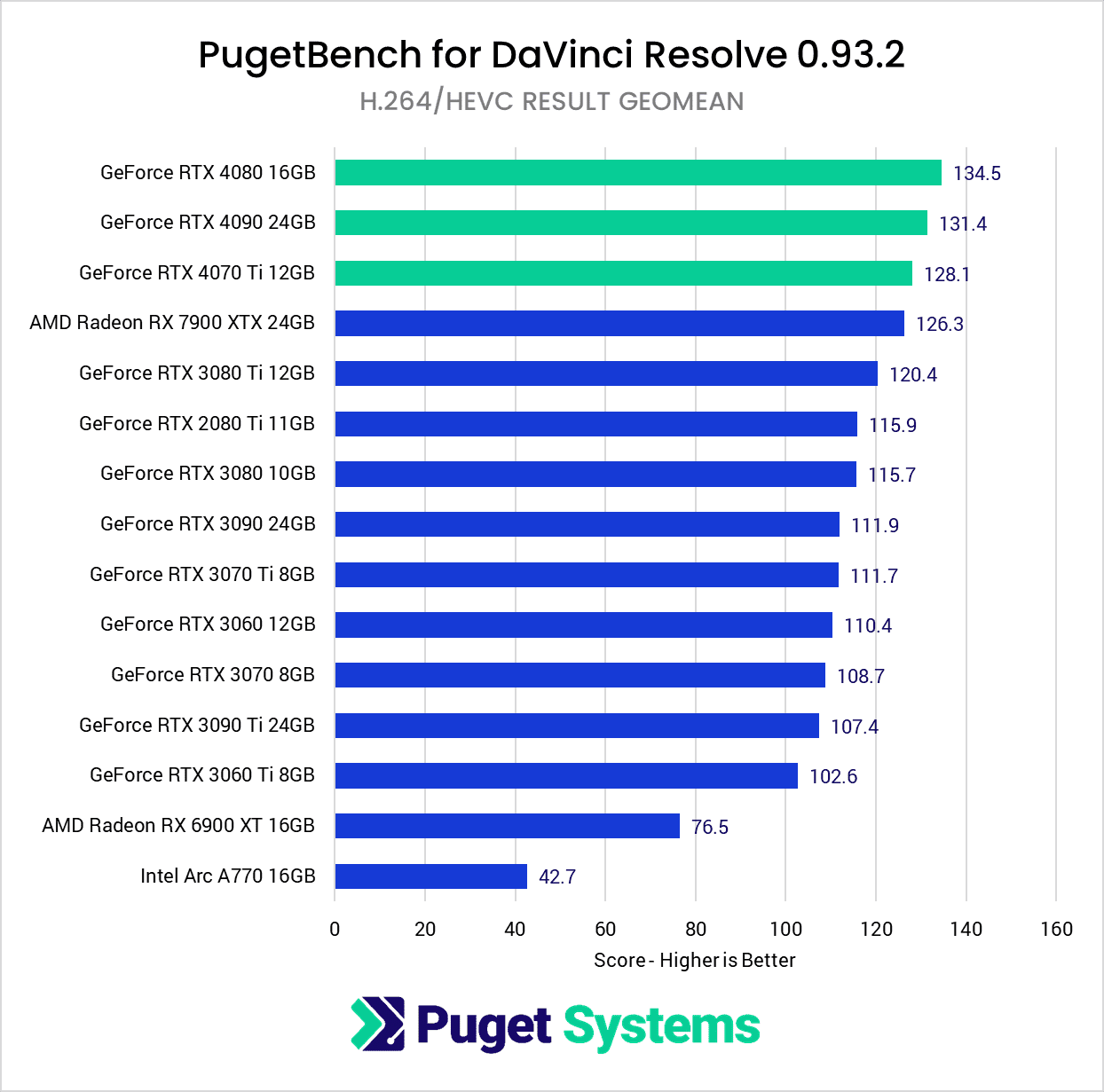

Moving on to chart #3, we wanted to pull out the H.264/HEVC tests that we were exporting to DNxHR (which has no hardware encoding support). There is no easy way to test pure decoding performance in a vacuum since you always have to encode or otherwise display the media, but this should be a good indicator of how each GPU handles hardware decoding of H.264 and HEVC media. Here, the RTX 40 Series cards top the chart, with the 4090, 4080, and 4070 Ti coming in first, second, and third place. The AMD Radeon 7900 XTX takes the fourth spot, but we want to point out that the difference between these top four cards is only about 7%. In other words, you are unlikely to notice the difference in the real world, so this single result likely shouldn’t influence your purchasing decision between NVIDIA and AMD.

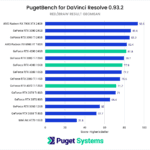

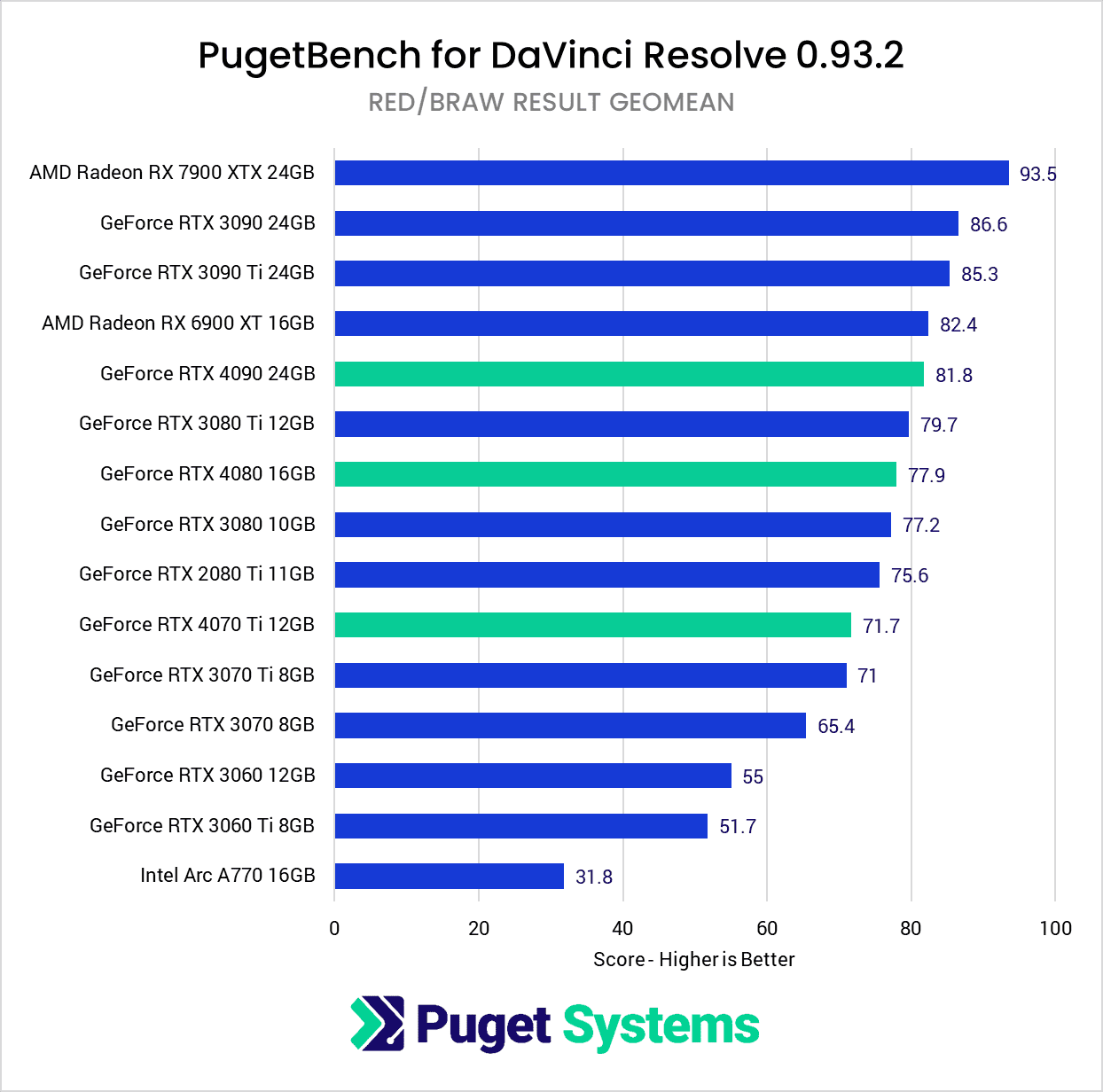

The final area we wanted to look at is RAW debayering for RED and BRAW footage (chart #4). These tests were a big surprise to us, as the RTX 40 Series show some issues compared to the previous generation. The difference isn’t massive, but the new GPUs tend to be about 2-8% slower than the RTX 30 Series. Given how close many of these GPUs perform for this task, that allows AMD to take the firm lead for this task, with the Radeon 7900 XTX beating even the RTX 4090 by a solid 14%. This issue with RAW footage is likely due to a driver or application bug and will likely be resolved in the future, but either way, the 7900 XTX is a solid GPU for RAW workflows in DaVinci Resolve.

Overall, we would classify the RTX 4090 as excellent for DaVinci Resolve, largely due to its terrific performance with GPU Effects. The RTX 4080 is an improvement over the previous generation, but often only by a relatively small 10%. The RTX 4070 Ti is the one card that isn’t great. It is no worse than the previous generation overall, but it also isn’t much faster in most cases.

Full Analysis: DaVinci Resolve Studio: NVIDIA GeForce RTX 40 Series Performance

Video Editing: Adobe Premiere Pro

Adobe Premiere Pro may not utilize the GPU quite as much as DaVinci Resolve (and effectively doesn’t take advantage of multi-GPU setups at all), but having a strong GPU can still make an impact, depending on your workflow.

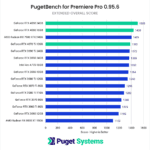

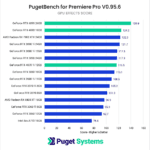

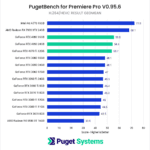

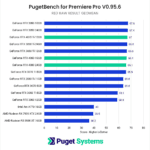

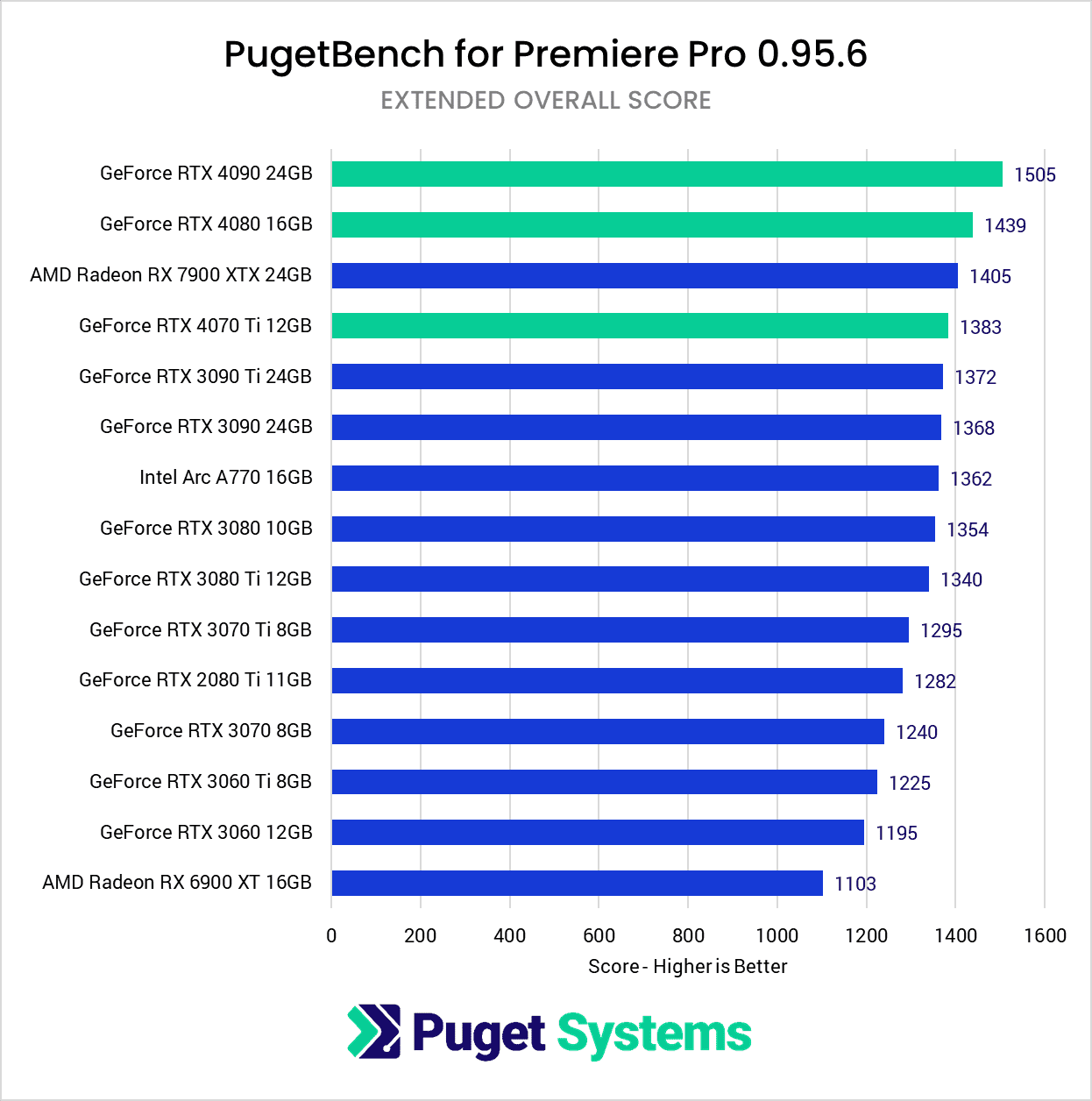

Once again, we will start with the Overall Score from our benchmark, which shows the RTX 40 Series cards taking the first, second, and fourth place in our chart. The AMD Radeon 7900 XTX comes in at spot #3, which makes these four GPUs all score almost exactly in line with their MSRP. However, just like with DaVinci Resolve, we want to dive in a little closer since there are some areas where the RTX 40 Series cards are great and others where they aren’t quite so hot.

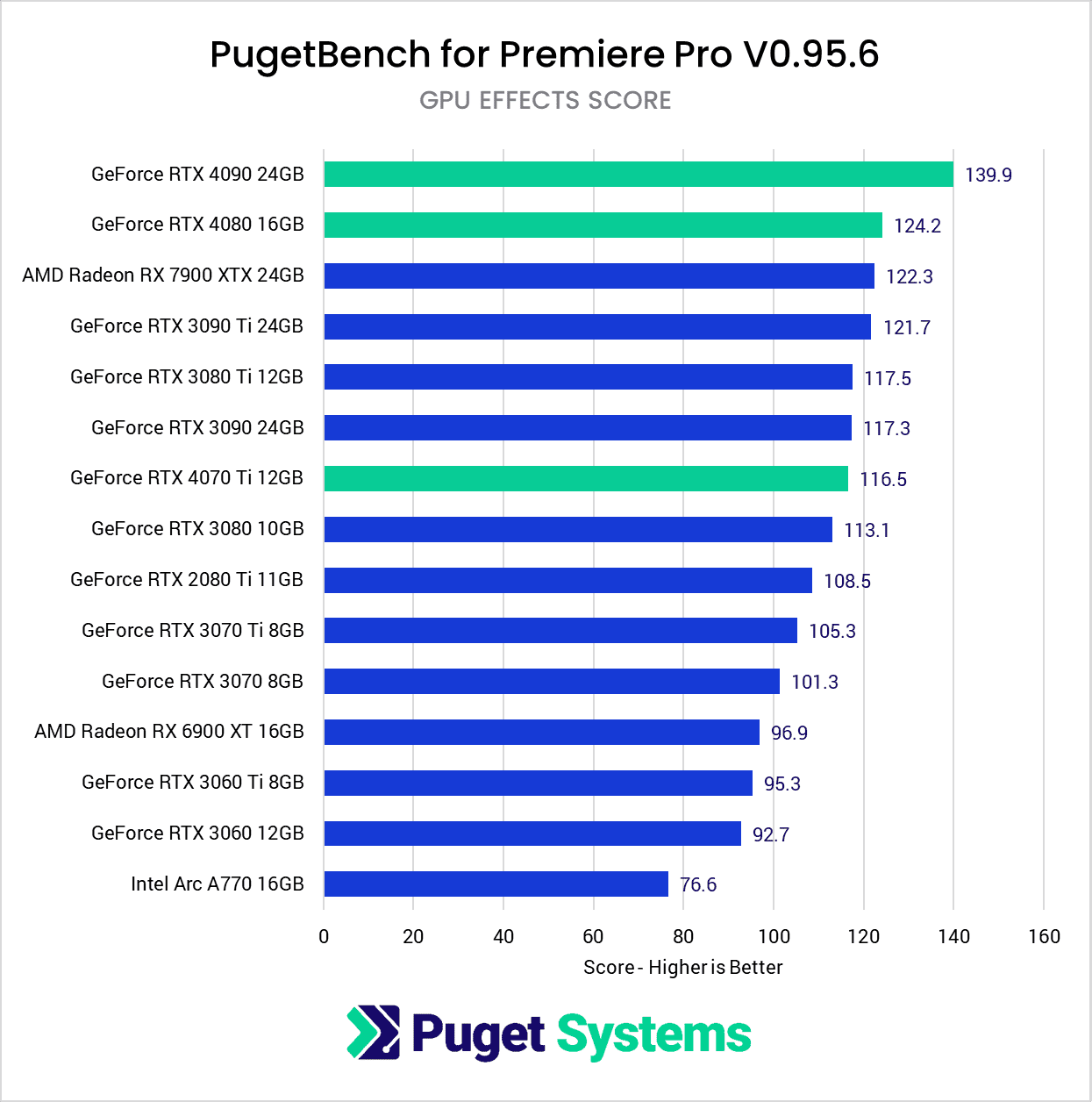

One area where a more powerful GPU can often give you a boost is for GPU-accelerated effects like Lumetri Color, most blurs, etc. (chart #2). For these tasks, the RTX 40 Series do very well. The RTX 4090 beats the RTX 3090 by a solid 10%, while the RTX 4080 is a smaller 7% faster than the RTX 3080 Ti. The RTX 4070 Ti doesn’t fare quite as well but still manages to sneak past the previous generation RTX 3080.

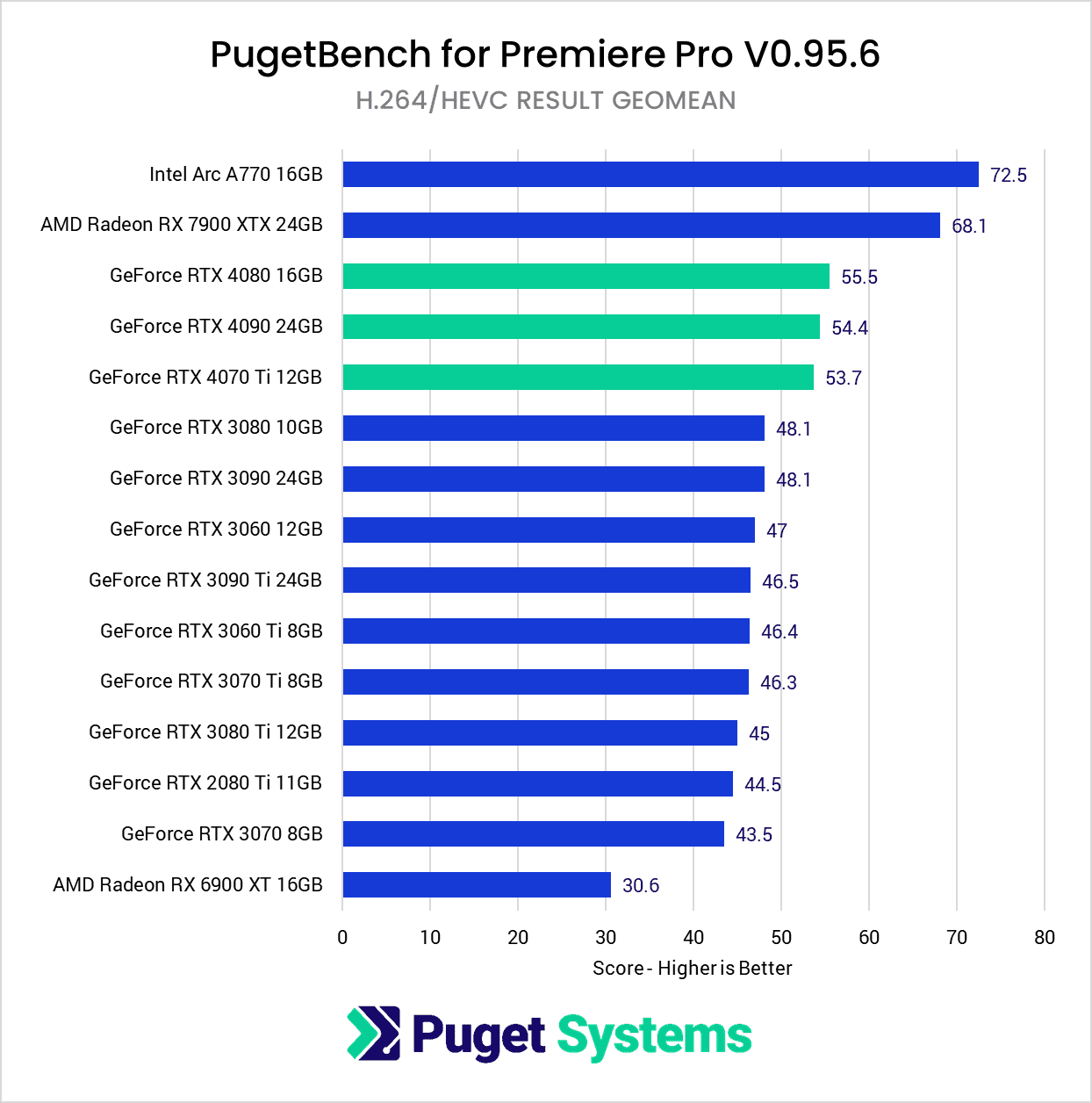

On chart #3, we are again looking at the geometric mean of the H.264 and HEVC results. This is an area where Intel and AMD do very well in Premiere Pro, resulting in the RTX 40 Series cards all trailing far behind. The Intel Arc A770 is actually at the top of this chart, coming in at 30% faster than the RTX 40 Series. The AMD Radeon 7900 XTX isn’t quite as strong, but it is still 23% faster than the RTX 40 Series. To be fair to NVIDIA, you could make up for their deficit in this area if you use an Intel Core CPU that supports Quick Sync (as that would be used for H.264/HEVC decoding in place of the GPU), but if that isn’t an option, Intel (and to a smaller degree, AMD) is a stronger choice for any longGOP codecs that Premiere Pro has GPU decoding support for.

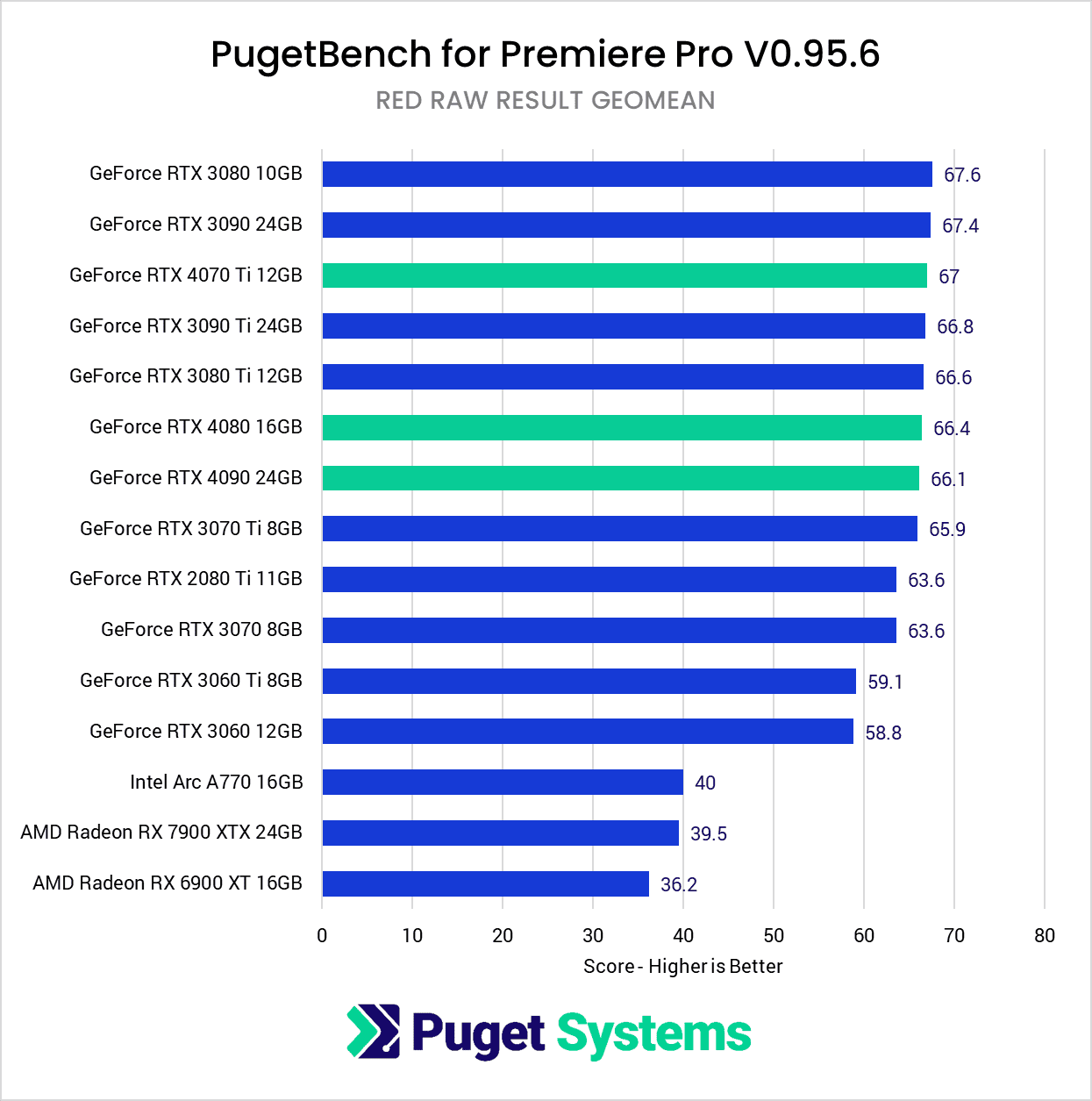

Lastly, chart #4 shows the performance when debayering RED RAW footage. This is an interesting workload to look at, because there is not as much of a performance difference between the individual NVIDIA GPU models as you might expect. Where there is a difference, however, is how much faster NVIDIA is compared to AMD and Intel as a whole. As much as Premiere Pro is better at using Intel and AMD GPUs for longGOP codecs, NVIDIA is even better for RAW. For example, the RTX 4080 is a massive 68% faster than the Radeon 7900 XTX.

Full Analysis: Adobe Premiere Pro: NVIDIA GeForce RTX 40 Series Performance

Video/Photo AI Enhancement: Topaz AI Suite

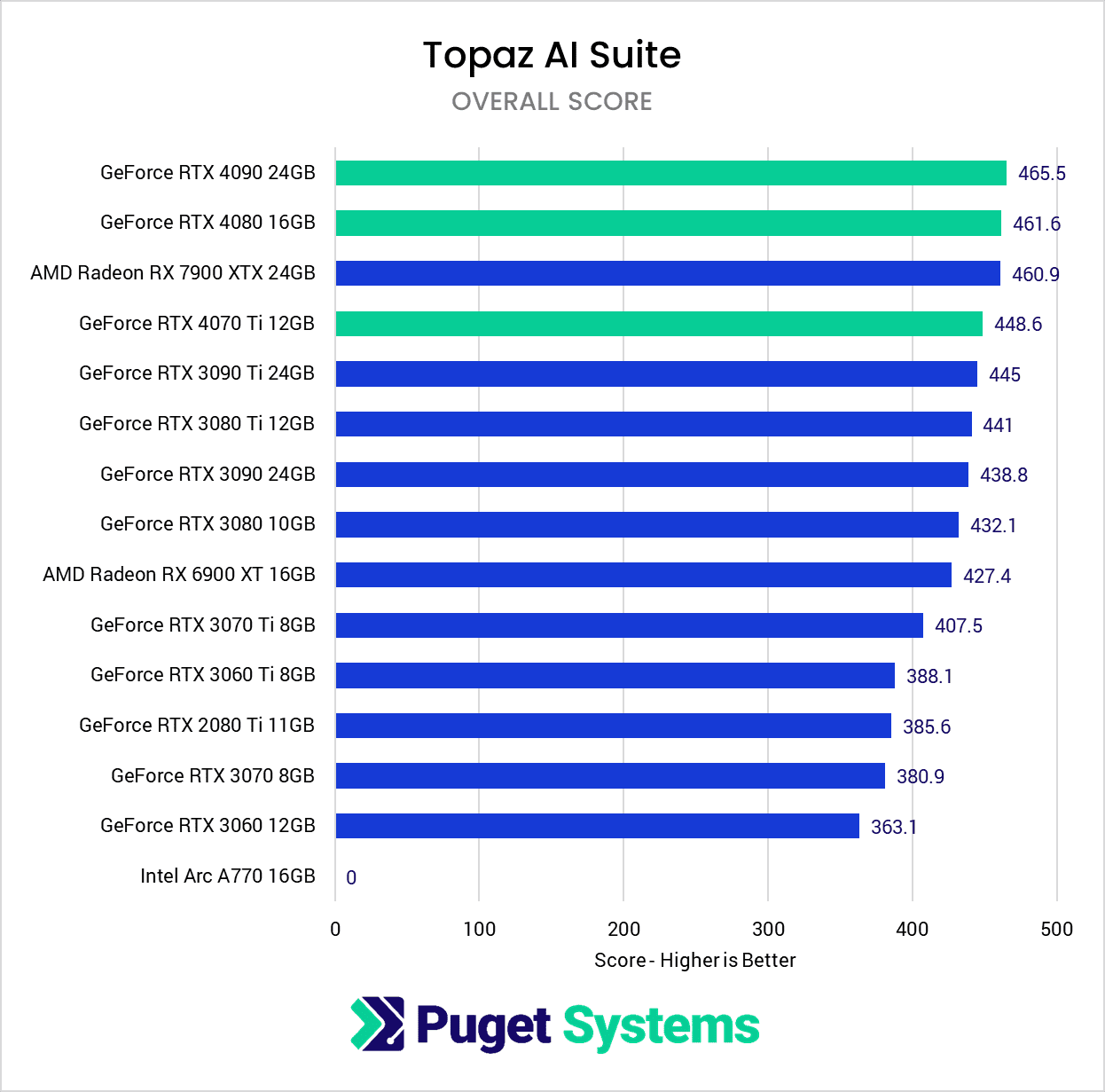

Topaz AI is fairly new to our testing, and we highly recommend checking out our in-depth article for information on what, and how, we are testing each of the applications in the Topaz AI suite. One of the big things to know about Topaz AI is that GPU performance is highly dependent on which application you are looking at. Video AI, Gigapixel AI, Sharpen AI, and DeNoise AI are the four we are focusing our testing on at the moment, and each behaves very differently.

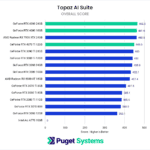

The Overall Score (chart #1) is the geometric mean of each app-specific score, but the performance per application shifts so much that if you use Topaz AI, we highly recommend looking at the individual results for the application you use.
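For readers unfamiliar with the geometric mean, the sketch below shows how an overall score of this kind can be computed. The per-application scores are hypothetical placeholders, not our measured results, and the function is only an illustration of the math rather than the benchmark's actual implementation.

```python
import math


def geometric_mean(scores):
    """Geometric mean of positive scores: exp of the average of their logs."""
    if not scores or any(s <= 0 for s in scores):
        raise ValueError("scores must be a non-empty sequence of positive numbers")
    return math.exp(sum(math.log(s) for s in scores) / len(scores))


# Hypothetical per-application scores for one GPU (illustrative only).
app_scores = {
    "Video AI": 120.0,
    "Gigapixel AI": 80.0,
    "Sharpen AI": 100.0,
    "DeNoise AI": 95.0,
}

overall = geometric_mean(list(app_scores.values()))
print(f"Overall score: {overall:.1f}")
```

The geometric mean weights relative differences equally across applications, so one app with unusually large raw numbers does not dominate the result. The flip side is that a strong result in one application and a weak result in another can cancel out, which is why the per-application charts matter more than the overall score.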

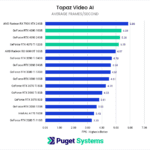

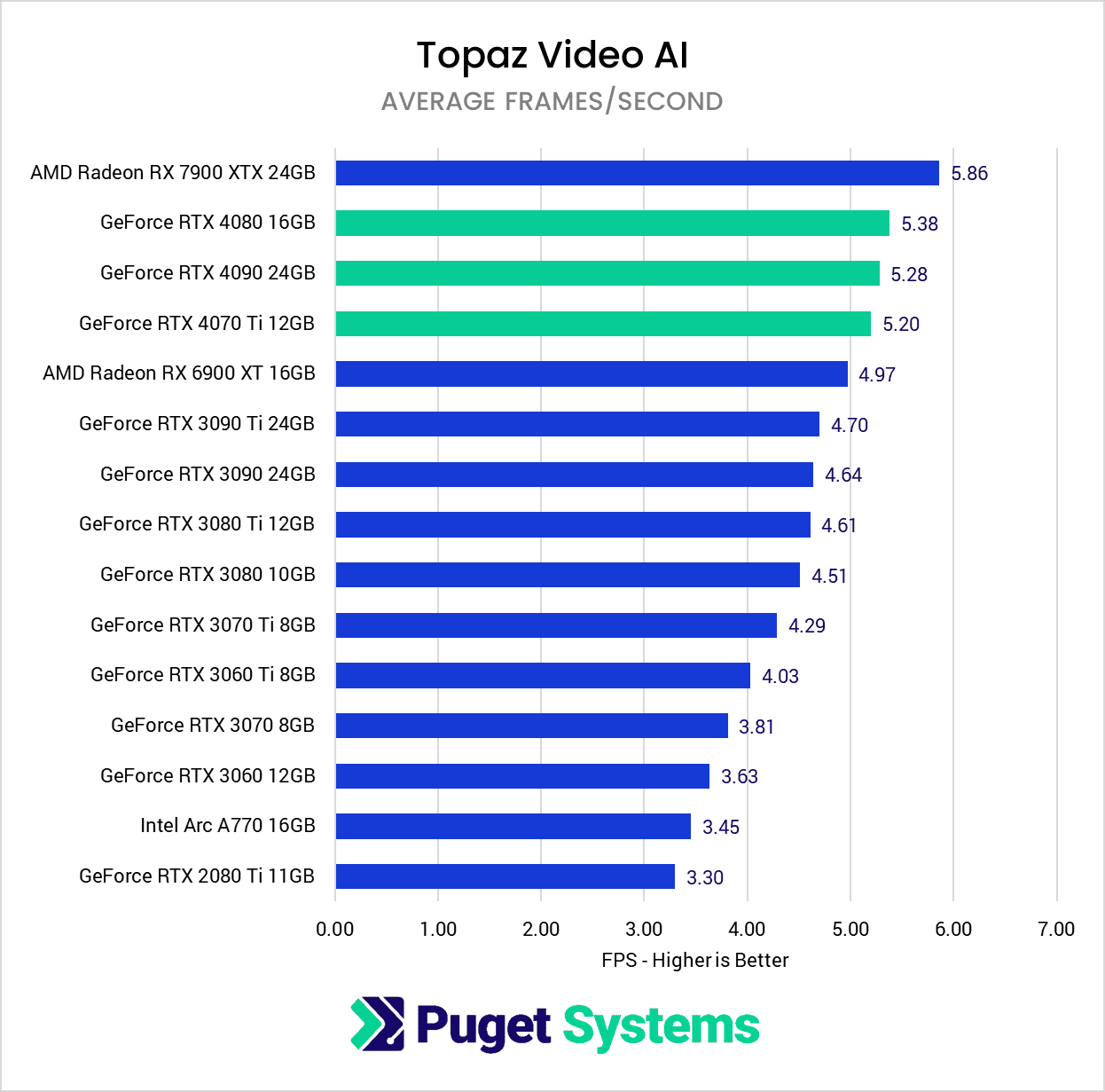

Topaz Video AI (chart #2) is the one we tend to get the most direct questions about, and for this application, the new RTX 40 Series GPUs are a solid ~15% faster than the previous generation RTX 30 Series cards. On the other hand, they are also solidly beaten by the AMD Radeon 7900 XTX, which came in at 9-13% faster than the RTX 40 Series. However, we will note that all these applications are often getting weekly updates, and some of the updates that have come out since we performed this testing are intended to improve performance specifically for NVIDIA GPUs. The updates are so frequent that we can’t keep delaying our articles to try to keep up with them, but it will be interesting to see how performance changes the next time we update our GPU articles.

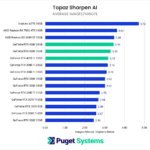

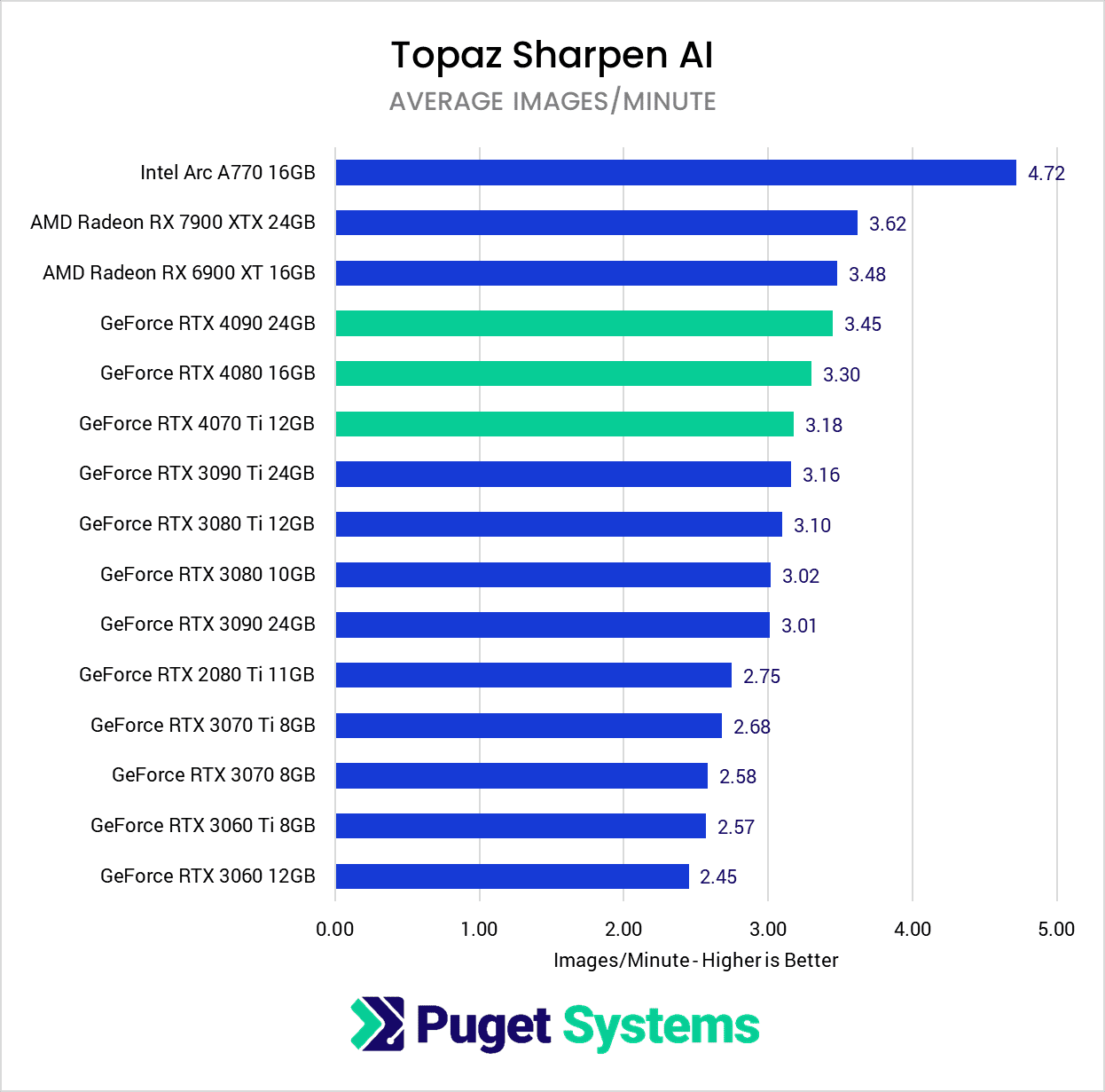

Beyond the Video AI application, there are also three photo-based applications. Topaz Sharpen AI (chart #3) is one that heavily favors the Intel Arc GPUs, with the A770 beating the Radeon 7900 XTX by 30%, and the RTX 4090 by 37%. The RTX 40 Series cards are faster than the previous generation for this application, but they are solidly behind AMD, not to mention Intel.

On the other hand, for Gigapixel AI (chart #4), the Intel Arc A770 couldn’t even finish our benchmark, consistently crashing with certain image formats. This is also the application where NVIDIA is strongest, with even the RTX 4070 Ti beating the Radeon 7900 XTX by a solid 22%.

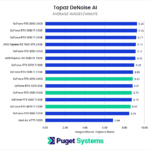

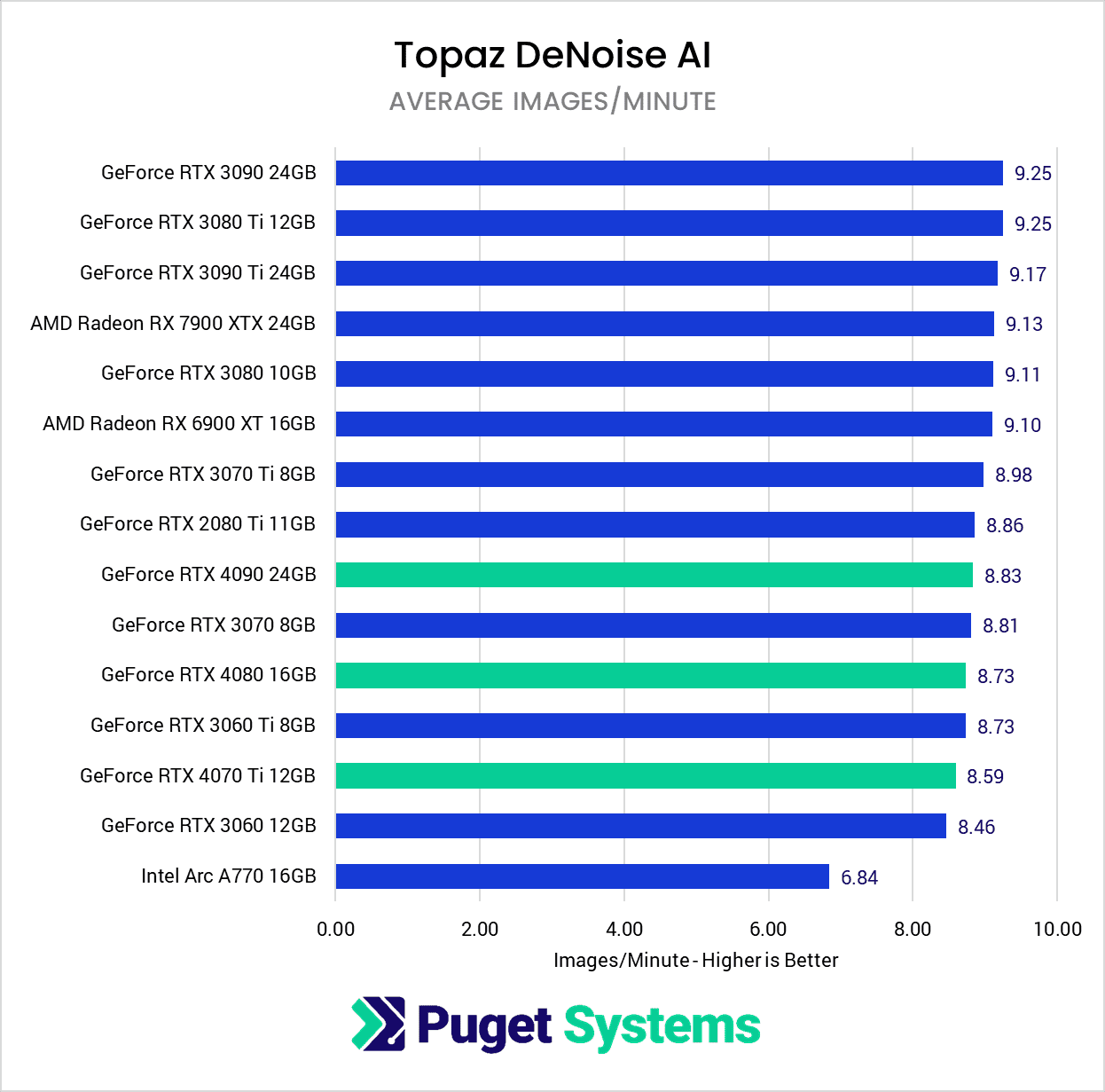

The final application we are currently testing is DeNoise AI (chart #5), although the GPU doesn’t make as much of a difference for that specific app. The big thing to point out here is that it is another area (similar to RAW performance in Premiere Pro and DaVinci Resolve) where the RTX 40 Series cards are a bit slower than the RTX 30 Series.

Full Analysis: Topaz AI Suite: NVIDIA GeForce RTX 40 Series Performance

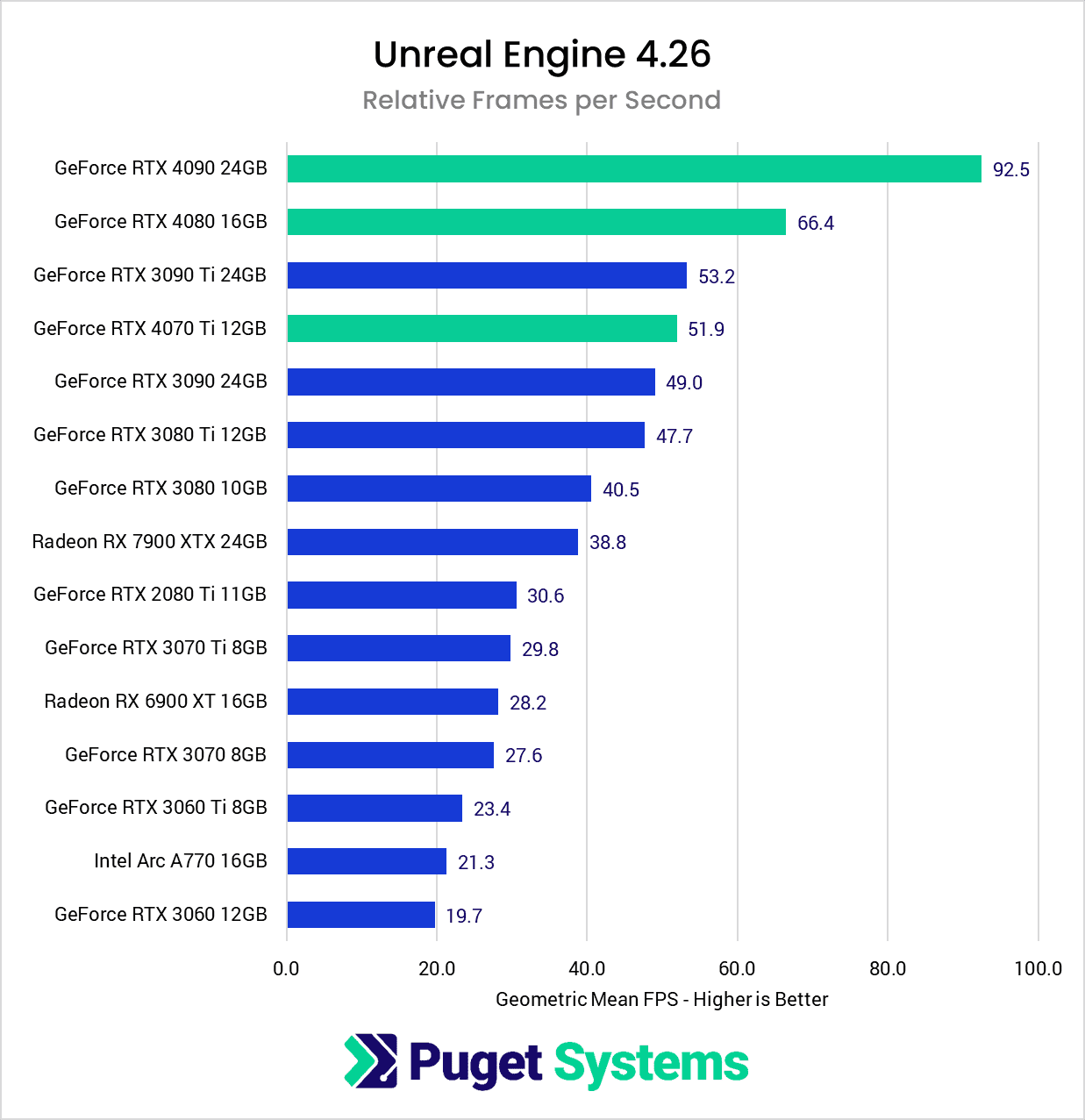

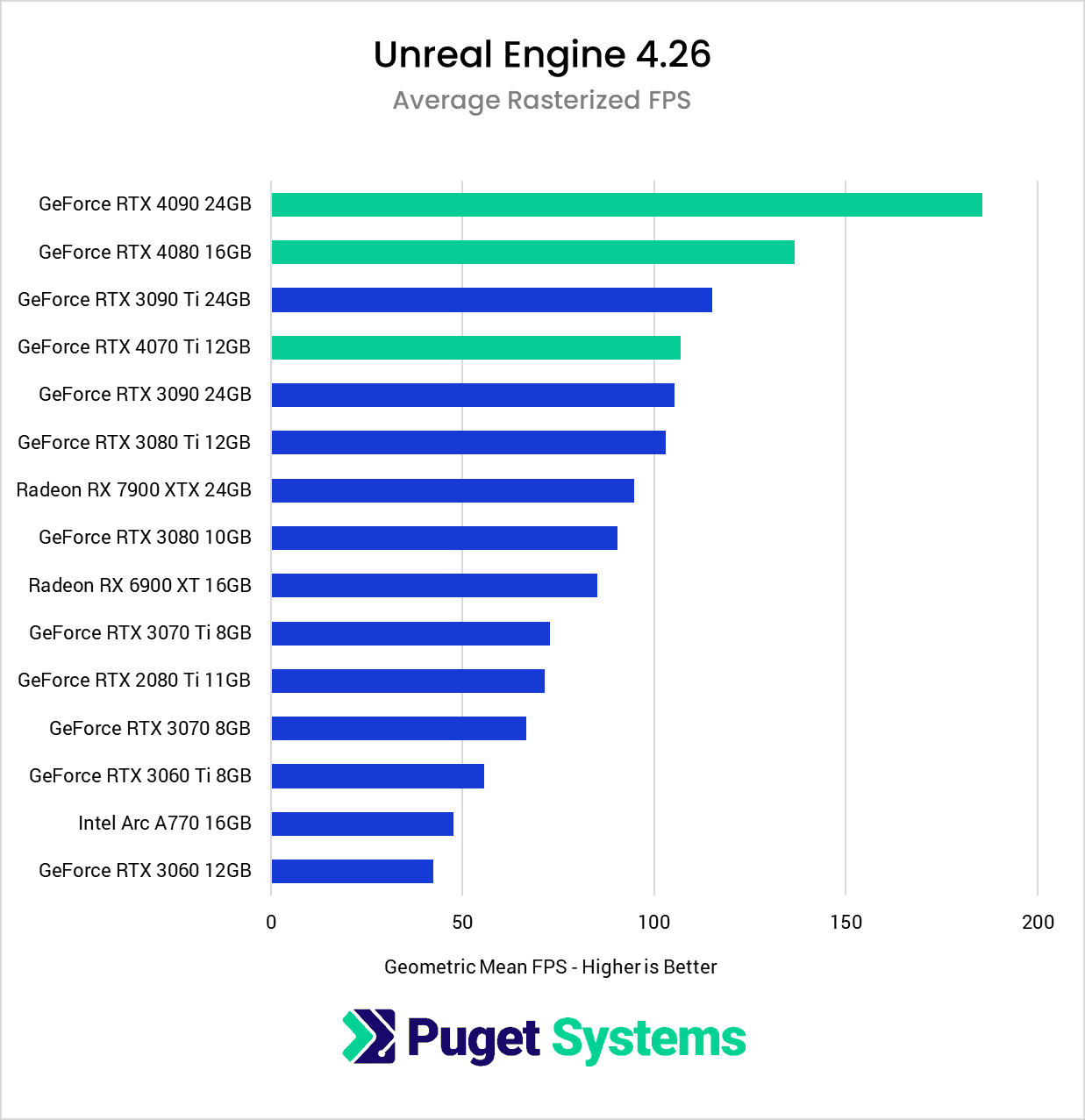

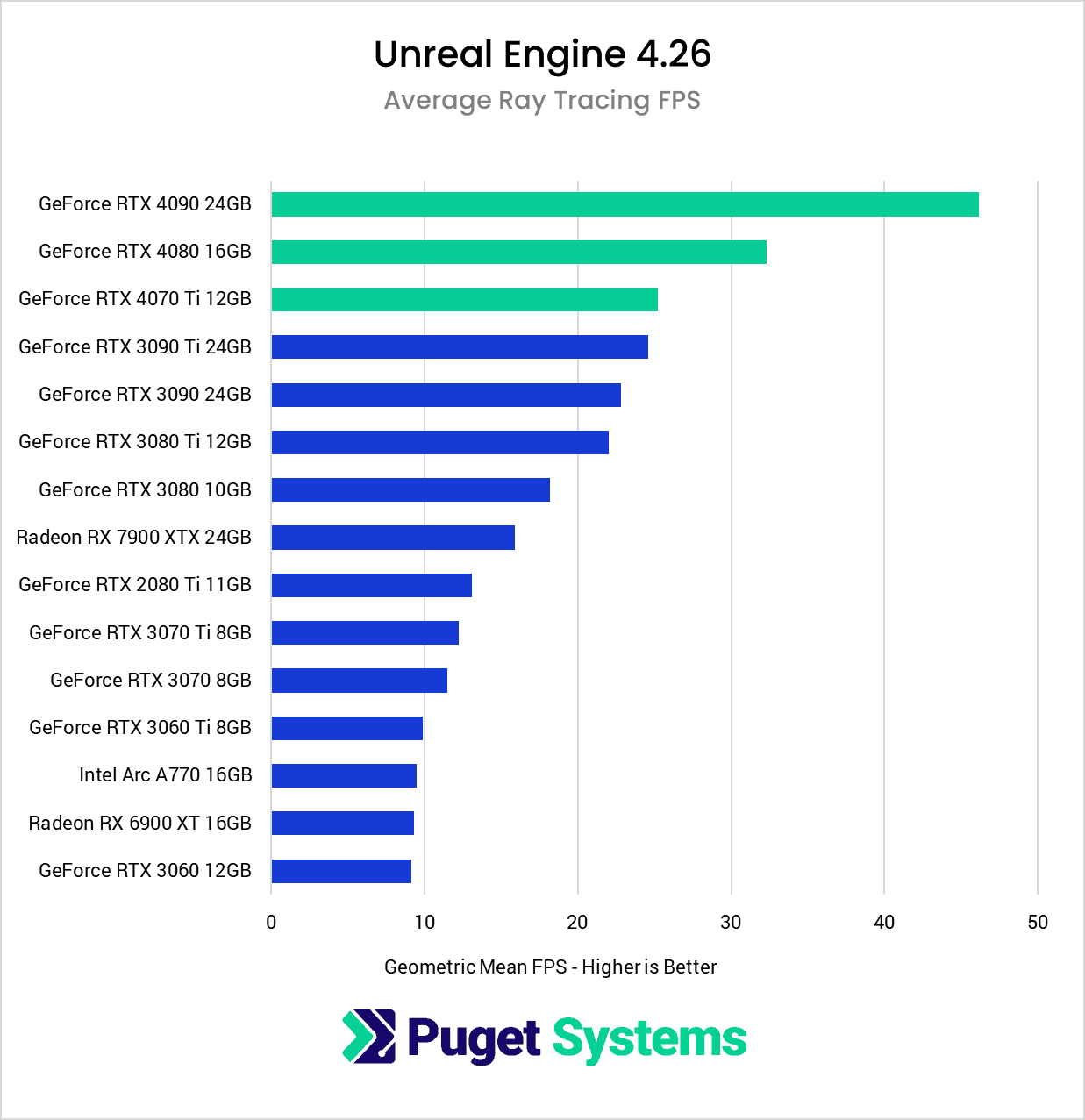

Game Dev/Virtual Production: Unreal Engine

In Unreal Engine, we test a variety of scenes with and without ray tracing to get a general picture of how different workflows will benefit from these new GPUs. The RTX 4090 is by far the overall winner, generating 90% more frames per second than the previous generation RTX 3090. Most professional workflows, be it Virtual Production or Game Development, are not looking for super high frame rates for their day-to-day work. Instead, this extra headroom translates into being able to put more geometry and effects on screen while still maintaining the desired frame rate.

The RTX 4080 is roughly 40% more powerful than the RTX 3080 Ti, while also upping the VRAM to 16GB. This will allow for bigger resolutions and more detailed environments in addition to the higher frame rates. The RTX 4070 Ti, on the other hand, is only 28% faster than the RTX 3080, which seems pretty small considering it is 14% more expensive.

Looking at the AMD side, the Radeon RX 7900 XTX is able to keep up with the more expensive RTX 4080 in rasterized workloads. This is quite impressive for AMD and very welcome competition. However, when using ray tracing, the favored workflow for Virtual Production, the 7900 XTX can’t keep up. NVIDIA has a large head start with this technology at the moment, but AMD is steadily improving.

AMD’s GPUs may not gain much ground in the Virtual Production fields, but their strong rasterized performance, plus their large 24GB of VRAM, will make for interesting options in the Game Development space.

Full Analysis: Unreal Engine: NVIDIA GeForce RTX 40 Series Performance

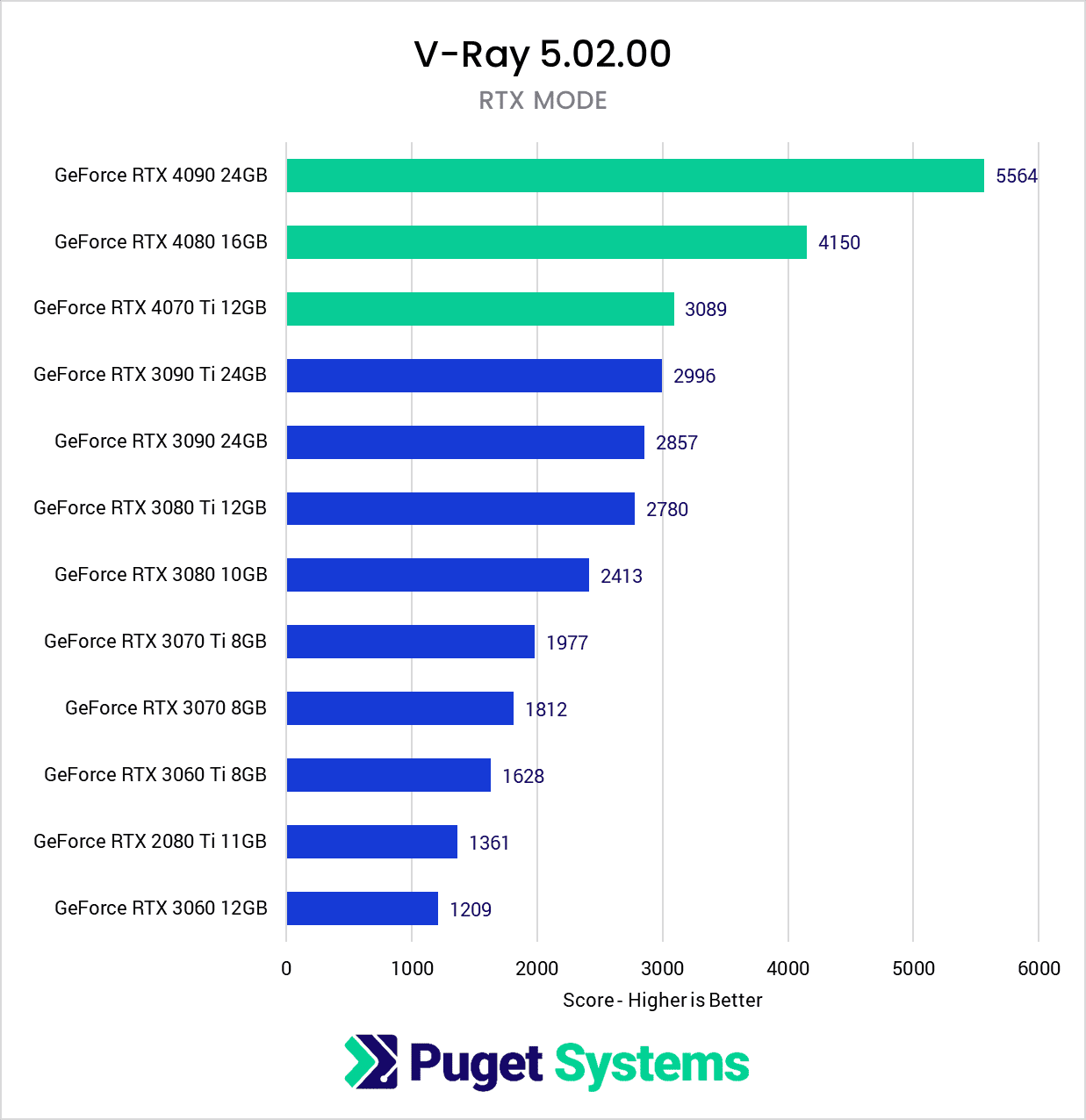

GPU Rendering: V-Ray

GPU rendering is, in many ways, the poster child for the RTX 40 Series, with the RTX 4090 24GB nearly doubling the score of the RTX 3090. It even outpaces the much more expensive RTX 3090 Ti, a GPU less than a year old. For those looking for the best-performing GPU for rendering, the RTX 4090 24GB is hands down the best option.

Moving on to the RTX 4080 16GB, it costs the same as the RTX 3080 Ti yet delivers 50% faster renders. It also increases the available VRAM from 12GB to 16GB, allowing for larger and more complex renders. Again, this is an excellent gen-over-gen improvement.

Lastly, the RTX 4070 Ti 12GB is $100 more than the RTX 3080 10GB (roughly 14% more expensive). It returns 28% faster rendering performance for that extra cost and brings an additional 2GB of VRAM. While this is still a welcome upgrade over the RTX 3080, it’s not nearly as impressive as the RTX 4090 and 4080. If we compare by product name, the new RTX 4070 Ti is 56% faster than the RTX 3070 Ti but also 33% more expensive. However we compare it, the RTX 4070 Ti provides more performance than the cost increase, but it is still not as impressive as its more powerful siblings.
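To make the "more performance than the cost increase" comparison concrete, here is a minimal sketch of the arithmetic. It uses only the V-Ray speedups and MSRPs quoted above; the function name `relative_value` is our own illustration, and it assumes cards actually sell at MSRP, which may not hold for the RTX 4070 Ti.

```python
def relative_value(speedup_pct, new_price, old_price):
    """Performance-per-dollar of the new card relative to the old one.

    Returns a value above 1.0 when the new card delivers more
    performance per dollar than the old card.
    """
    perf_ratio = 1.0 + speedup_pct / 100.0   # e.g. 28% faster -> 1.28
    price_ratio = new_price / old_price      # e.g. $799 / $699
    return perf_ratio / price_ratio


# V-Ray speedups and MSRPs as quoted in the text (MSRP is an assumption
# about street pricing, not a measured figure).
vs_3080 = relative_value(28, 799, 699)
vs_3070_ti = relative_value(56, 799, 599)

print(f"RTX 4070 Ti vs RTX 3080:    {vs_3080:.2f}x performance per dollar")
print(f"RTX 4070 Ti vs RTX 3070 Ti: {vs_3070_ti:.2f}x performance per dollar")
```

Under these assumptions, the RTX 4070 Ti works out to roughly 1.12x the performance per dollar of the RTX 3080 and roughly 1.17x that of the RTX 3070 Ti in V-Ray: a gain, but a modest one.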

Full Analysis: V-Ray: NVIDIA GeForce RTX 40 Series Performance

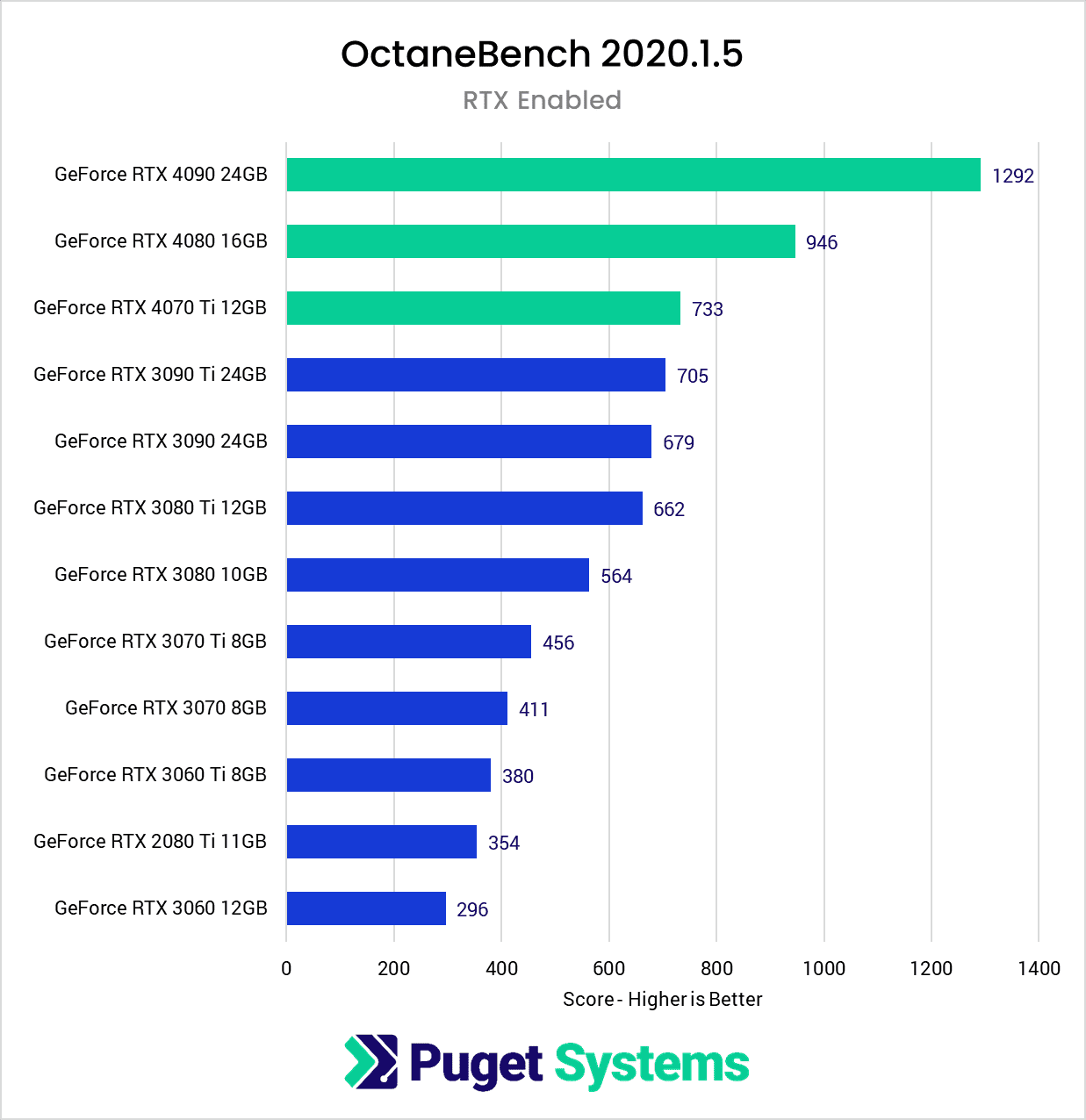

GPU Rendering: Octane

Like we saw in V-Ray, the RTX 40 Series does fantastic in this GPU rendering engine. With the RTX 4090 24GB coming in at 90% faster than the RTX 3090, there is no comparison for GPU rendering hardware. The RTX 4080 16GB is not quite as impressive, but still 43% faster than the RTX 3080 Ti for the same price. This seems almost small compared to the 4090, but is still a very welcome improvement. This also brings an additional 4GB of VRAM, something that every Octane user will appreciate.

The newest card to the RTX 40 Series lineup – the RTX 4070 Ti – is a modest 30% faster than the closest GPU from the previous generation in price, the RTX 3080. Because the 4070 Ti is 14% more expensive, this 30% performance increase seems fairly minor, but it does also bump the VRAM up to 12GB. If we compare it to the 3070 Ti, the new model is 61% faster but also 33% more expensive. Again, these are all good improvements, just nothing like we see with the 4090.

Full Analysis: Octane: NVIDIA GeForce RTX 40 Series Performance

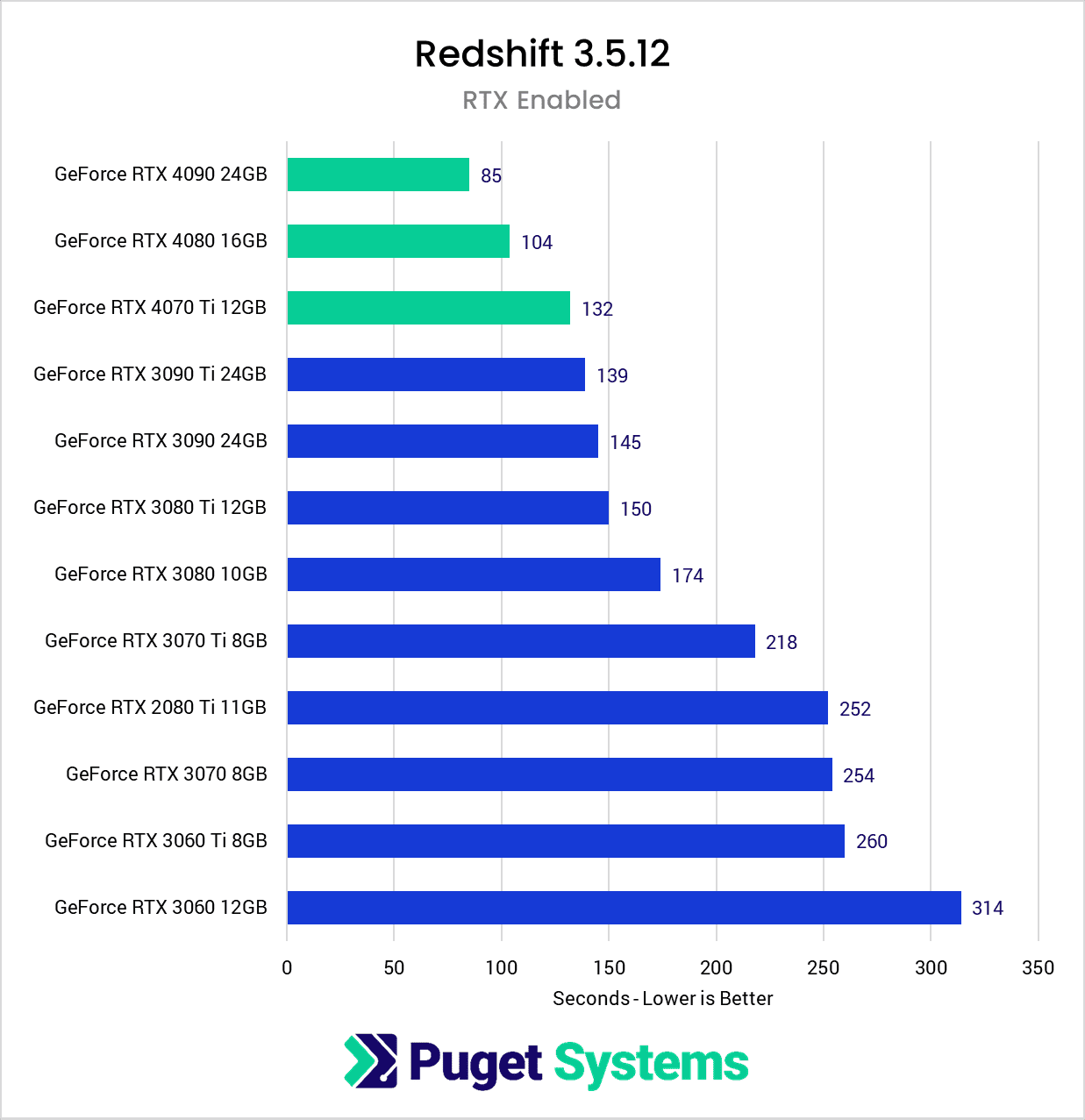

GPU Rendering: Redshift

Using the built-in Redshift Benchmark echoes what we’ve seen with the other GPU rendering benchmarks but to a lesser extreme. The RTX 4090 is 71% faster than the RTX 3090. This is lower than the other results, but that is most likely due to a limitation of the Redshift benchmark: it renders a single frame and reports how long it takes to complete, and that time is getting so short that we may be running into an artificial barrier. Nonetheless, a roughly 70% improvement over the previous generation is quite impressive.

The RTX 4080 sees a 44% improvement, while the 4070 Ti posts a 32% faster render. Both are modest results for this type of workload, but still welcome, especially since both GPUs get more VRAM than the previous generation.

While we only looked at rendering in Redshift, these results should be echoed by the new dynamics system in Cinema4D. Cloth, rope, and particle simulations should be much faster with this generation.

Full Analysis: Redshift: NVIDIA GeForce RTX 40 Series Performance

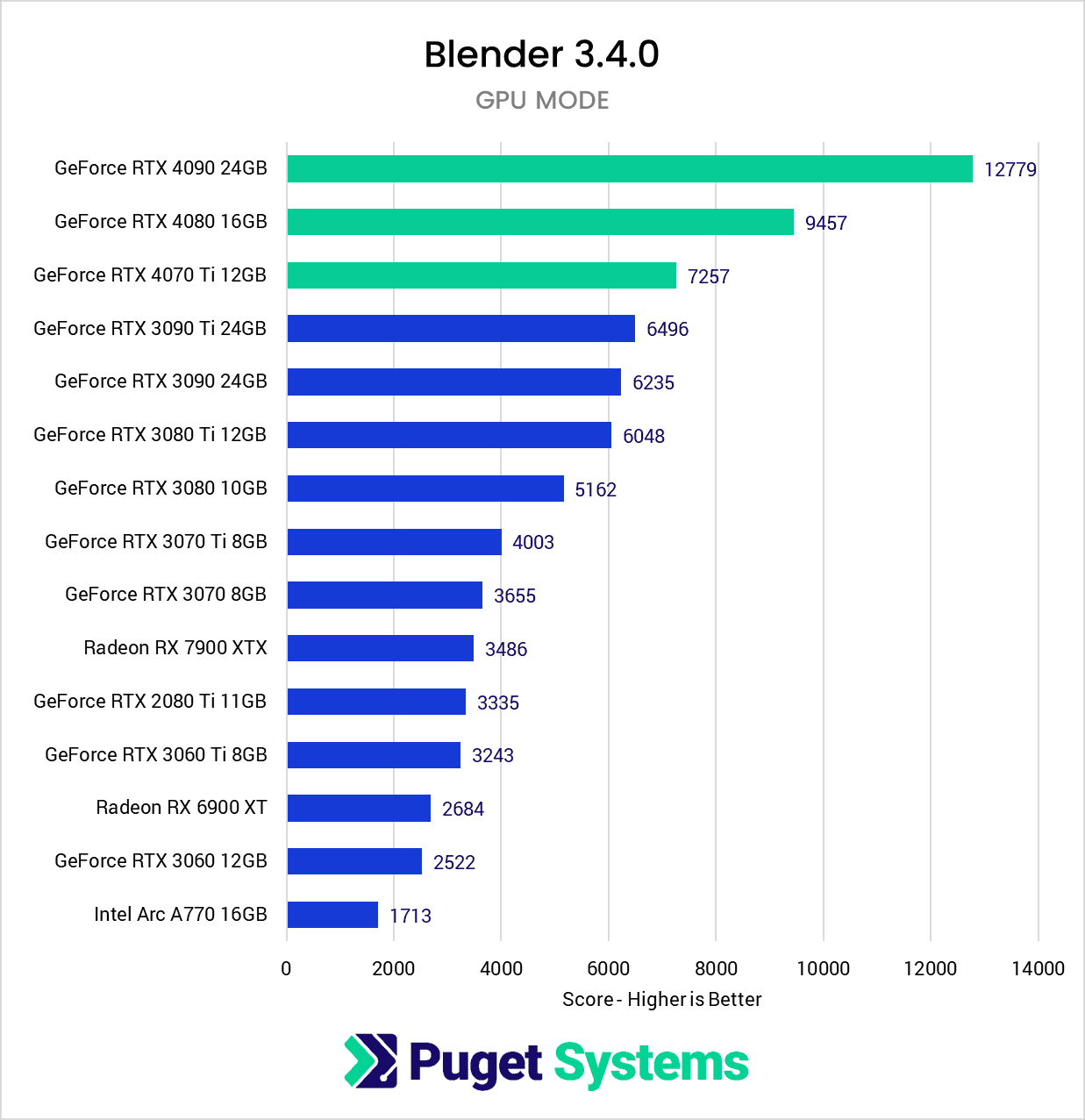

GPU Rendering: Blender

Blender is one of the few GPU renderers available that supports both NVIDIA and AMD. NVIDIA has long dominated the rendering space, and their CUDA and OptiX APIs have made for some very powerful GPU-based renderers. But for Blender, we have the option to use NVIDIA, AMD, or even Intel GPUs.

Starting with the RTX 4090 24GB, it delivers remarkable results in terms of rendering performance, outpacing the RTX 3090 by 97%, which nearly doubles its score for only a 7% price increase. For content creators seeking the ultimate rendering performance, the RTX 4090 24GB is the top choice.

The RTX 4080 16GB is another standout GPU, providing 56% faster rendering performance than the RTX 3080 Ti, while also increasing the available VRAM from 12GB to 16GB, making it an excellent gen-over-gen improvement.

The RTX 4070 Ti 12GB, although not as impressive as its powerful siblings, still offers 40% faster rendering performance for the extra 14% cost increase compared to the RTX 3080 10GB. With 2GB additional VRAM, the RTX 4070 Ti provides more performance relative to the cost and outperforms the RTX 3070 Ti. However, it falls short compared to the impressive results of the RTX 4090 and 4080.

Looking at the AMD RX 7900 XTX, we see good improvement over the previous RX 6900 XT, but it is still lagging behind NVIDIA. Blender only recently added HIP support, and AMD still has a long way to go in the GPU rendering industry, but it’s great to see that there is still some competition out there.

Full Analysis: Blender: NVIDIA GeForce RTX 40 Series Performance

How Well Do the NVIDIA GeForce RTX 40 Series Perform for Content Creation?

NVIDIA has long been our go-to choice for most content creation workflows, and the RTX 40 Series largely continue that trend. However, while these new cards are stellar for GPU-heavy workflows like rendering, there were a few cases where we found that either AMD or Intel GPUs can be faster, and a few cases where the RTX 40 Series cards were actually a bit slower than the previous generation RTX 30 Series cards.

Starting off with video editing, the RTX 40 Series cards are great for many GPU-focused tasks like processing GPU-accelerated effects. Working with longGOP footage (H.264/HEVC) can be hit or miss, with NVIDIA giving terrific performance in Resolve, but AMD and especially Intel taking a big lead in Premiere Pro. If needed, this inconsistent showing can be offset by using an Intel Core CPU with Quick Sync. RAW media, however, seems to have an issue with the RTX 40 Series cards, as they consistently showed slightly lower performance (to the tune of about 5%) than the previous generation RTX 30 Series cards.

It is worth pointing out that inconsistent showings like this aren’t limited to NVIDIA. For example, the AMD Radeon 7900 XTX is terrific when working with RAW footage in DaVinci Resolve, but terrible at handling the same footage in Premiere Pro. This highlights how important it is to examine benchmark results for the specific application you are using and not rely on either generic benchmarks or benchmarks for other applications – no matter how similar they are in terms of industry or workflow.

In addition to our video editing testing, we also added a new benchmark this time around for the Topaz AI suite. These applications utilize artificial intelligence (AI) to enhance photos or videos for things like upscaling, frame interpolation, sharpening, or noise removal. Unfortunately, even though these applications are all made by the same developer, relative GPU performance can change dramatically depending on which application you are looking at. Overall, AMD holds a small lead over the new RTX 40 Series cards for many applications (including Video AI and Sharpen AI), but NVIDIA is significantly better for Gigapixel AI. This disparity means that, again, you need to look at the results for the specific applications you use to determine whether the RTX 40 Series cards are a good choice for your workflow.

Unreal Engine is an application we are currently seeing a lot of growth for, with it being used not only for game development but also for virtual production and VFX. Being a real-time engine, it benefits more from powerful GPUs than many of the other applications we test, which makes it critical to get a reliable, high-performance card. The RTX 40 Series cards bring some major improvements in real-time performance, especially at the top end where the RTX 4090 is around 90% faster than the previous generation. This means those in virtual production or VFX will be able to do even more in their scenes than ever before. AMD is beginning to compete in this industry, but while the Radeon RX 7900 XTX shows some great rasterized performance, it still lags behind when using ray tracing.

GPU rendering sees up to a 95% (nearly 2x) improvement in speeds across all applications tested on the RTX 4090. Cutting your render times in half is a huge win for anyone in this space. The lower-end models aren’t quite as crazy, although the RTX 4080 still averaged 49% (1.5x) higher performance while also bringing more VRAM; something all users will appreciate. The RTX 4070 Ti was relatively less impressive, with only a 32% performance improvement over the previous generation. Given the price increase for this model, this isn’t much of an upgrade if you already own an RTX 3080, but can still be a big boost if you have an RTX 20 Series or older GPU.
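As a rough illustration of how these "percent faster" figures map onto render times, the sketch below converts a speedup into the corresponding reduction in time. It assumes render time is inversely proportional to the benchmark score, which is a simplification, and the helper name `time_saved_pct` is ours rather than part of any benchmark.

```python
def time_saved_pct(speedup_pct):
    """Percent reduction in render time for a given percent speedup.

    Assumes time is inversely proportional to performance, so a card
    that is 100% faster (2x) finishes in half the time.
    """
    speed_ratio = 1.0 + speedup_pct / 100.0
    return (1.0 - 1.0 / speed_ratio) * 100.0


# Speedup figures quoted in the paragraph above.
for card, speedup in [("RTX 4090", 95), ("RTX 4080", 49), ("RTX 4070 Ti", 32)]:
    saved = time_saved_pct(speedup)
    print(f"{card}: {speedup}% faster -> about {saved:.0f}% less render time")
```

Under this assumption, a 95% speedup cuts render time by about 49%, a 49% speedup by about 33%, and a 32% speedup by about 24%. A percent speedup always overstates the time saved, which is worth keeping in mind when reading the charts.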

Overall, there are a few workflows where the RTX 40 Series appear to have some minor performance bugs, but there are even more areas where they are absolutely terrific. Because of this, we continue to give NVIDIA the “best GPUs for content creation” crown, but it is always worth it to examine benchmark results for the exact applications you use, as there are always nuances to be found.

If you are looking for a workstation for any of the applications we tested, you can visit our solutions page to view our recommended workstations for various software packages, our custom configuration page, or contact one of our technology consultants for help configuring a workstation that meets the specific needs of your unique workflow.