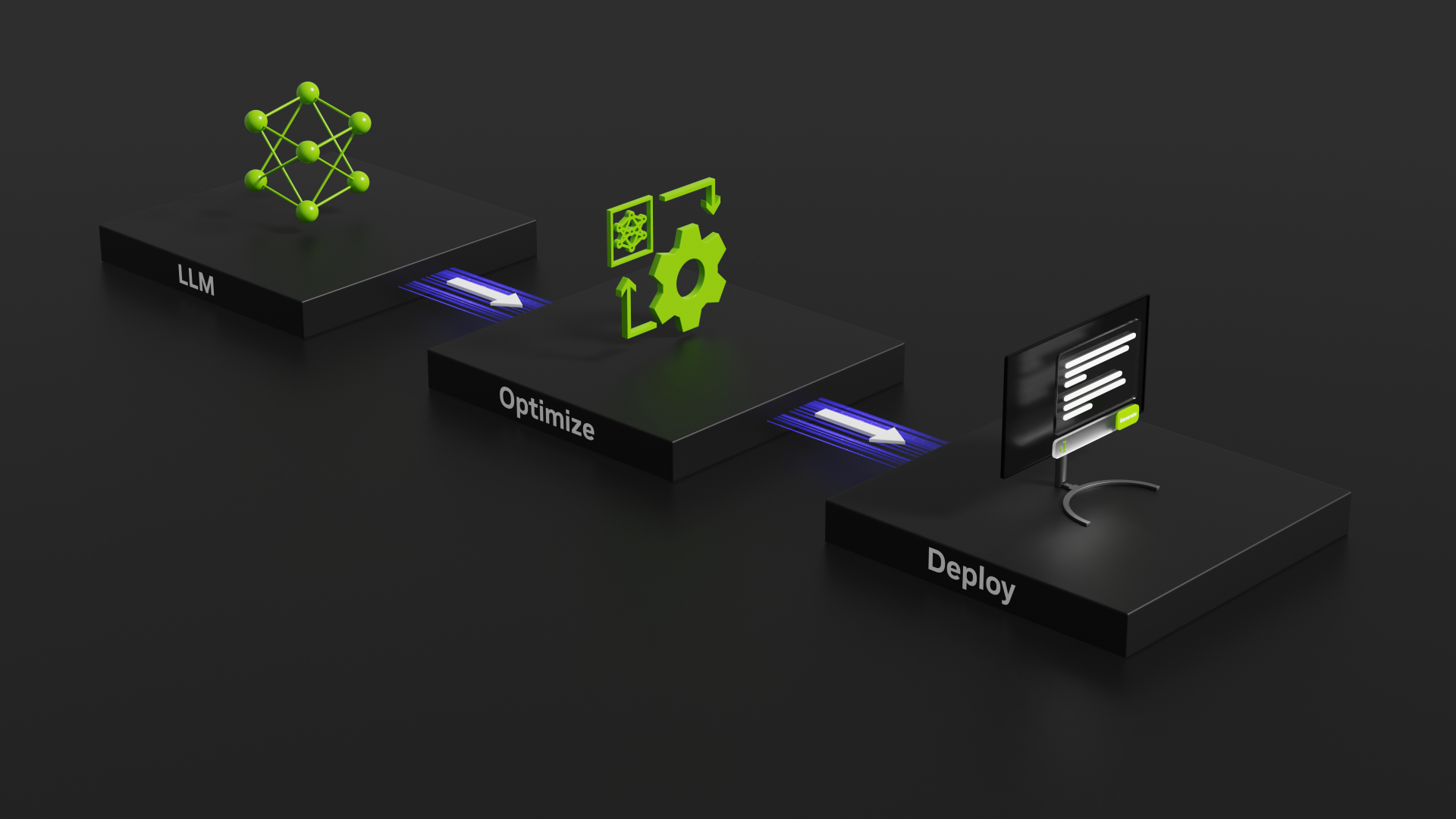

Evaluating the speed of GeForce RTX 40-Series GPUs using NVIDIA’s TensorRT-LLM tool for benchmarking GPU inference performance.

Results and thoughts from testing a variety of Stable Diffusion training methods using multiple GPUs.

NVIDIA GTC 2023 was outstanding! To say that about a virtual conference tells you how much I value it. This post is largely a catalog of the talks I found interesting along with titles that I think will be interesting to a larger audience and my colleagues at Puget Systems.

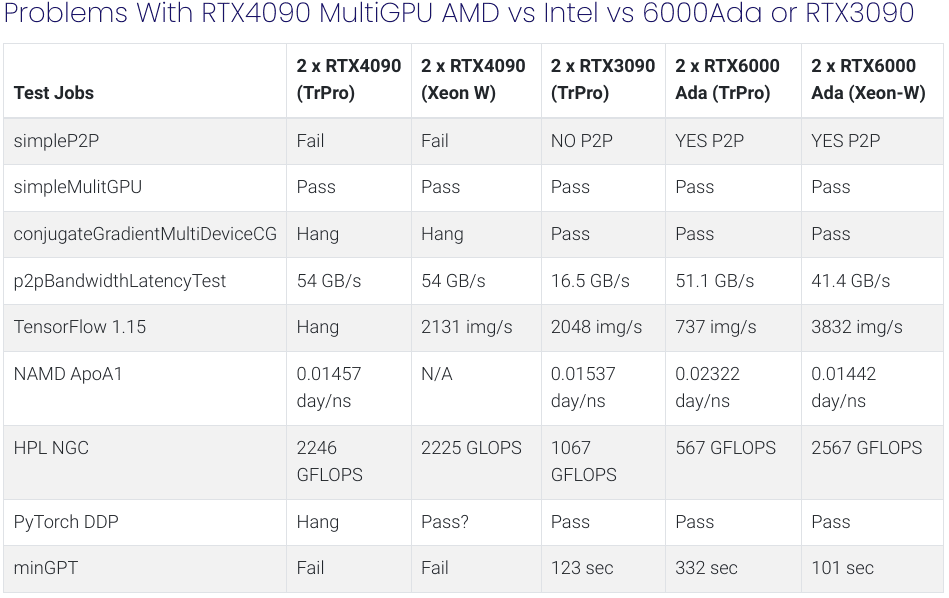

I was prompted to do some testing by a commenter on one of my recent posts. They had concerns about problems with dual NVIDIA RTX 4090s on AMD Threadripper Pro platforms. I ran some applications to reproduce the reported problems and dug deeper into the issues with more extensive testing. The table included in the post tells all!

This post presents preliminary ML-AI and Scientific application performance results comparing NVIDIA RTX 4090 and RTX 3090 GPUs. These are early results using the NVIDIA CUDA 11.8 driver.

This is just a short post to announce a more usable version of the NVIDIA GPU power-limit setup script that I released a few months ago. This update to version 0.2 uses an interactive mode to set GPU power limits and can optionally set up a systemd unit file to apply these limits on subsequent reboots.

We have a new collection of GPU-accelerated Molecular Dynamics benchmark packages put together for GROMACS, NAMD 2, and NAMD 3-alpha10. (The benchmark packages will be available to the public soon.) In this post we present results for:

– 3 applications: GROMACS, NAMD 2, and NAMD 3-alpha10,

– 8 MD simulations,

– 12 different NVIDIA GPUs,

– 96 total results.

In this post we look at using a testing lab of Windows systems as a benchmarking platform for Linux scientific applications, using network boot with nfsroot and home mounts. Linux is booted on the systems “diskless”, leaving the Windows installs untouched. LTSP turned out to be a great time saver for setting up the configuration.

This post presents testing data showing that power-limit reduction on NVIDIA GPUs gives significant benefits for both high-wattage and lower-wattage GPUs. Power-limit vs. performance data is presented for 1-4 A5000 and 1-4 RTX 3090 GPUs.

In this post I describe a Bash shell script I recently put together for setting up automatic NVIDIA GPU power-limit lowering at system boot. It provides a reliable way to configure and maintain multi-GPU systems for stable operation under heavy load.